Facial coding and K-coefficient

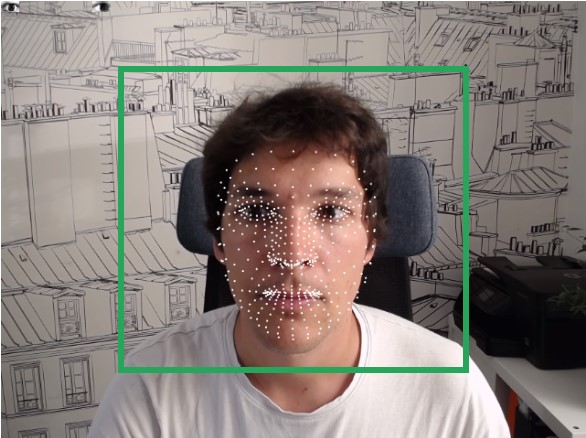

RealEye provides analysis based on facial landmarks, using a popular method developed by Paul Ekman: the Facial Action Coding System (FACS).

The Facial Action Coding System (FACS) is a system for taxonomizing human facial movements by their appearance on the face, based on a system originally developed by the Swedish anatomist Carl-Herman Hjortsjö. It was later adopted by Paul Ekman and Wallace V. Friesen and published in 1978. Ekman, Friesen, and Joseph C. Hager published a significant update to FACS in 2002.

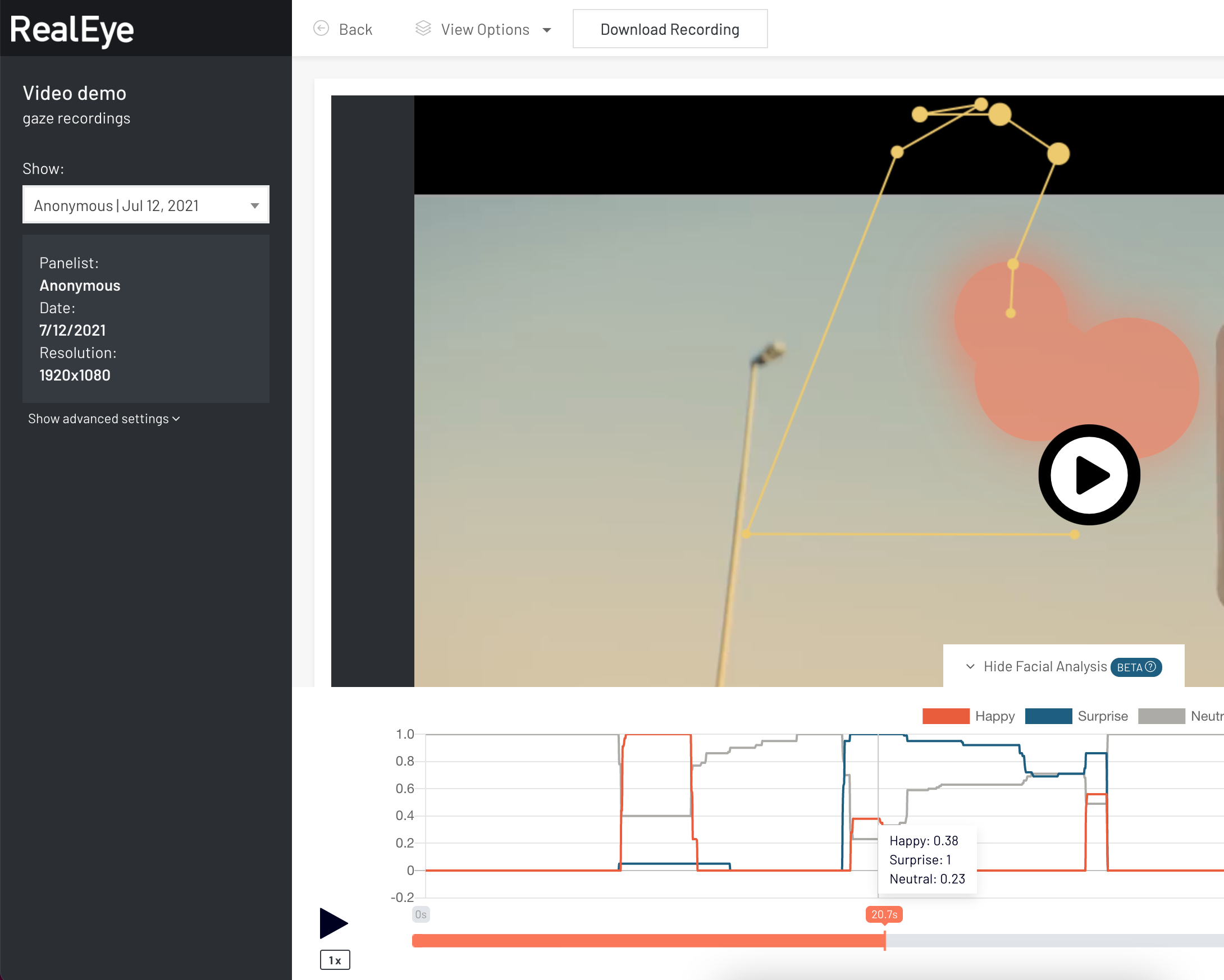

There are 3 emotions available in RealEye:

- happy, based on cheek raising and lip corner pulling (i.e., a smile),

- surprise, based on brow raising, slight raising of the upper lid, and jaw dropping,

- neutral, based on a lack of lip pulling, a lack of eyebrow movement, and a lack of mouth opening.
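The list above can be read as a set of rules over FACS action units (AUs). The sketch below is only an illustration of that reading, not RealEye's actual classifier: the AU numbers are the standard FACS codes for the movements named above, while the function `label_frame`, its input format (a set of active AU numbers for one video frame), and the exact rule combinations are assumptions made for this example.

```python
from typing import Optional, Set

# Standard FACS action unit numbers for the movements named in the list above.
AU_INNER_BROW_RAISER = 1
AU_OUTER_BROW_RAISER = 2
AU_UPPER_LID_RAISER = 5
AU_CHEEK_RAISER = 6
AU_LIP_CORNER_PULLER = 12
AU_JAW_DROP = 26


def label_frame(active_aus: Set[int]) -> Optional[str]:
    """Hypothetical rule-based labeling of one frame from its active AUs.

    Returns "happy", "surprise", "neutral", or None when no rule matches.
    """
    brows_raised = bool(active_aus & {AU_INNER_BROW_RAISER, AU_OUTER_BROW_RAISER})

    # Happy: cheek raising together with lip corner pulling (a smile).
    if {AU_CHEEK_RAISER, AU_LIP_CORNER_PULLER} <= active_aus:
        return "happy"

    # Surprise: raised brows, raised upper lid, and a dropped jaw.
    if brows_raised and {AU_UPPER_LID_RAISER, AU_JAW_DROP} <= active_aus:
        return "surprise"

    # Neutral: no lip pulling, no eyebrow movement, no mouth opening.
    if (AU_LIP_CORNER_PULLER not in active_aus
            and not brows_raised
            and AU_JAW_DROP not in active_aus):
        return "neutral"

    return None
```

A real system would work with continuous AU intensities and per-participant thresholds rather than a simple on/off set; the boolean form is used here only to keep the mapping readable.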

On the same plot, one more parameter is available: the K-coefficient and its mean value. This parameter indicates the attention level, where:

- K > 0 means relatively long fixations followed by short saccade amplitudes, indicating focal processing,

- K < 0 means relatively short fixations followed by relatively long saccades, indicating ambient processing.
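To make the sign convention above concrete, here is a minimal sketch of the coefficient as defined in the Krejtz et al. article cited in this section: for each fixation, the z-scored fixation duration minus the z-scored amplitude of the saccade that follows it, averaged over a time window. The function names, the input format, and the use of the population standard deviation are assumptions of this example; it does not describe how RealEye computes or normalizes the value internally.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def k_per_fixation(durations: Sequence[float],
                   next_saccade_amplitudes: Sequence[float]) -> List[float]:
    """Per-fixation K values.

    durations[i] is the duration of fixation i, and
    next_saccade_amplitudes[i] is the amplitude of the saccade after it.
    Both are z-scored over the whole recording before subtraction.
    """
    if len(durations) != len(next_saccade_amplitudes):
        raise ValueError("inputs must have the same length")
    if len(durations) < 2:
        raise ValueError("need at least two fixation/saccade pairs")

    mu_d, sd_d = mean(durations), pstdev(durations)
    mu_a, sd_a = mean(next_saccade_amplitudes), pstdev(next_saccade_amplitudes)
    if sd_d == 0 or sd_a == 0:
        raise ValueError("z-scores are undefined when all values are equal")

    return [(d - mu_d) / sd_d - (a - mu_a) / sd_a
            for d, a in zip(durations, next_saccade_amplitudes)]


def k_coefficient(k_values: Sequence[float]) -> float:
    """Mean K over a window of per-fixation values."""
    return mean(k_values)


# Illustrative (made-up) values: two long fixations with short saccades,
# then two short fixations with long saccades.
durations_ms = [400.0, 420.0, 100.0, 90.0]
amplitudes_deg = [1.0, 1.2, 8.0, 9.0]
k_values = k_per_fixation(durations_ms, amplitudes_deg)

focal_window = k_coefficient(k_values[:2])    # positive: focal processing
ambient_window = k_coefficient(k_values[2:])  # negative: ambient processing
```

Because both inputs are z-scored over the whole recording, the mean of K over that same recording is zero by construction, so the coefficient is informative when averaged over a shorter window (for example, per stimulus or per time interval).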

Note: On this plot, the K-coefficient parameter is normalized, so its range is from -1 to 1. In the CSV file, values are not normalized.

This parameter is based on the following article:

Krzysztof Krejtz, Andrew Duchowski, Izabela Krejtz, Agnieszka Szarkowska, and Agata Kopacz. 2016. Discerning Ambient/Focal Attention with Coefficient K. ACM Trans. Appl. Percept. 13, 3, Article 11 (May 2016), 20 pages. https://doi.org/10.1145/2896452

If you want to learn more about the K-coefficient (how it works and how it can be used), please read the article on our blog.

The data is collected at a rate of about 30 Hz (assuming a 30 Hz webcam).

The data is available for each participant separately and also in an aggregated way.

Please also remember that talking during the study can affect facial features and therefore the emotion readings.

Here you can find a case study with an example of how you can use these additional insights based on the entertainment (happiness) level.

You can also download the emotions data as a CSV file.
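As a rough idea of what can be done with such an export, the sketch below averages each emotion per participant. The column names (`participant_id`, `timestamp_ms`, `happy`, `surprise`, `neutral`) and the sample rows are hypothetical and used only for illustration; check the header of your actual CSV file and adjust the names accordingly.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

EMOTIONS = ("happy", "surprise", "neutral")

# Made-up sample data with a HYPOTHETICAL layout; not a real RealEye export.
SAMPLE_CSV = """participant_id,timestamp_ms,happy,surprise,neutral
p1,0,0.10,0.00,0.90
p1,33,0.60,0.05,0.35
p2,0,0.00,0.20,0.80
p2,33,0.20,0.40,0.40
"""


def mean_emotions(csv_text: str) -> dict:
    """Return {participant_id: {emotion: mean value}} for the given CSV text."""
    samples = defaultdict(lambda: defaultdict(list))
    for row in csv.DictReader(io.StringIO(csv_text)):
        for emotion in EMOTIONS:
            samples[row["participant_id"]][emotion].append(float(row[emotion]))
    return {
        pid: {emotion: mean(values) for emotion, values in by_emotion.items()}
        for pid, by_emotion in samples.items()
    }


per_participant = mean_emotions(SAMPLE_CSV)
```

To read a downloaded file instead of the inline sample, pass the file contents, for example `mean_emotions(open("emotions.csv", encoding="utf-8").read())`, where the file name is again only a placeholder.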

NOTE:

RealEye provides "happy", "surprise" and "neutral" emotions plus the Attention analysis. Why don't we provide more emotions?

From our internal tests and discussions, these are the most common to observe and the most important at the same time (at least from our current customer base perspective).

Before implementing it, we ran several tests on different facial coding (FC) platforms (all of them use FACS, https://en.wikipedia.org/wiki/Facial_Action_Coding_System), and we noticed that in the vast majority of results, only the "surprise" and "happiness" graphs showed any movement (there was also a lot of sadness; more on that below). People generally don't show many emotions in front of a laptop screen, and when they do, sadness, anger, fear, and disgust are not the ones we saw in the data, no matter what the stimuli were.

We dug a bit deeper and realized that the only emotions we could guarantee to be outside the "error zone" are "neutral," "happiness," "sadness," and "surprise." It is also worth underlining that we perform an individual face calibration for every participant to learn their neutral state (not all FC tools do this, which is why they so often detect sadness where there is none; google "resting face" to see what we mean). Here's a sample from a happy/neutral advertisement analyzed with other FC tools; look at the "Sad" level. It is probably caused by the "resting face", and data like this isn't really usable in our opinion.

So, in the end, we decided to move forward with detecting emotions, but not to provide any uncertain data. We narrowed the set down to the emotions we're sure about and that are most commonly detected with the lowest error: "neutral", "happy", and "surprise".

From the technical point of view, we could extend RealEye to capture "sadness".

You can also run RealEye studies without eye-tracking or the facial coding feature.